The Technology

AI That Reads Your Body

Today's AI requires you to type, click, or speak to communicate. Our patent-pending technology lets AI understand you through your physiology, creating content that responds to your emotions in real time.

The Control Surface Problem

Think about how you interact with AI today. You type prompts. You click buttons. You speak commands. These are control surfaces: crude interfaces that force you to consciously articulate what you want.

But what about what you feel? What about whether the AI's output is actually working for you? Today's AI is blind to your emotional and physiological response.

The technology described in our patent application enables a fundamentally different interaction: AI that understands you by reading your body.

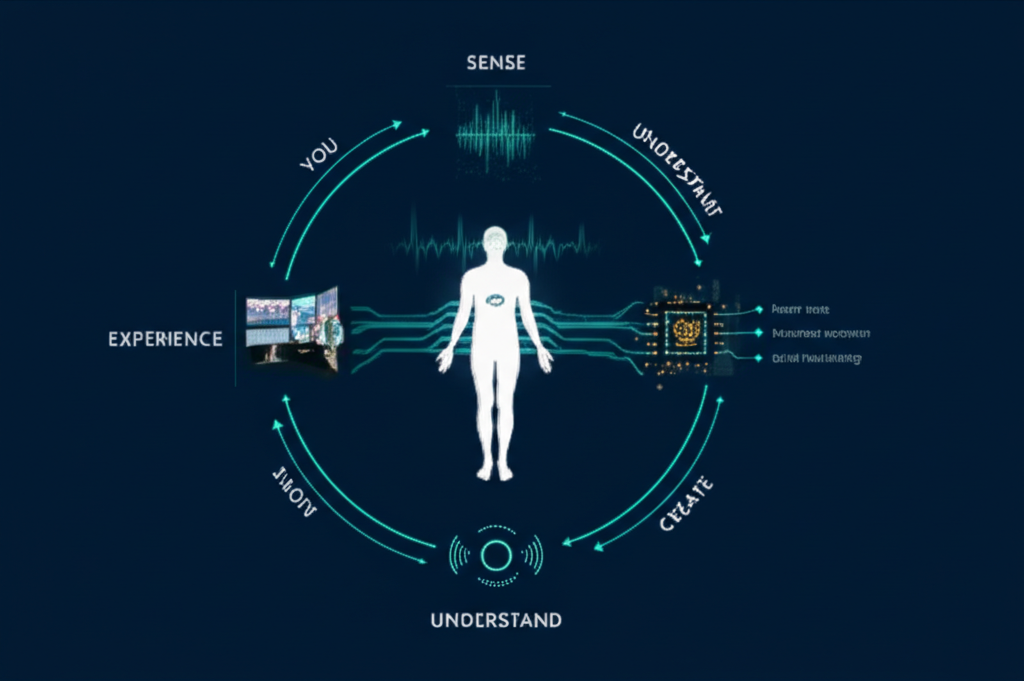

The Closed Loop

A continuous feedback cycle between your physiology and AI-generated content.

You

The human at the center of the experience

Sense

Biometrics captured continuously

Understand

Deviation from intended state computed

Create

New content synthesized in real time

Experience

Content delivered, loop continues
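
As a rough illustration only, the four stages above could be wired together along these lines. Every name here (`Segment`, `understand`, `create`, `run_loop`, the 0-to-1 arousal scale, the `gain` value) is hypothetical and chosen for this sketch; the sensor is a caller-supplied stub and the "generator" simply nudges one number, so this shows the shape of the loop rather than the actual system.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Segment:
    """One short piece of generated content (hypothetical structure)."""
    index: int
    intensity: float  # 0.0 (calm) to 1.0 (maximally intense)


def understand(measured_arousal: float, intended_arousal: float) -> float:
    """Deviation from the intended state; positive means the viewer is calmer than intended."""
    return intended_arousal - measured_arousal


def create(previous: Segment, deviation: float, gain: float = 0.5) -> Segment:
    """Stub generator: moves intensity in proportion to the deviation, clamped to [0, 1]."""
    intensity = min(1.0, max(0.0, previous.intensity + gain * deviation))
    return Segment(index=previous.index + 1, intensity=intensity)


def run_loop(sense: Callable[[], float], intended_arousal: float, steps: int) -> List[Segment]:
    """Repeat Sense -> Understand -> Create -> Experience for `steps` iterations."""
    segment = Segment(index=0, intensity=0.5)
    delivered = [segment]                                   # Experience: opening segment
    for _ in range(steps):
        measured = sense()                                  # Sense
        deviation = understand(measured, intended_arousal)  # Understand
        segment = create(segment, deviation)                # Create
        delivered.append(segment)                           # Experience, loop continues
    return delivered
```

Calling `run_loop(lambda: 0.2, intended_arousal=0.8, steps=3)` simulates a viewer who stays calmer than intended, so each new segment is more intense than the last until the clamp is reached.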

Why This Matters

Imagine asking AI to create a personalized sci-fi thriller. Today's generative AI can do that. But it creates the film once, with no idea whether you're actually scared, excited, or bored while watching.

What if the film changed every second based on whether you're on the edge of your seat?

1. Today: Blind Generation

AI generates content based on your prompt, then you watch passively. The AI has no idea if it's working. You're just an audience.

2. Nourova: Responsive Generation

AI reads your biometrics continuously, understanding your emotional response. Content evolves moment-by-moment to optimize your experience.

Reading Your Body

Your body constantly broadcasts your emotional state through dozens of signals. Our system reads these signals to understand you without requiring you to articulate anything.

These signals are far more honest than what you might type or say. Your body doesn't lie about whether you're scared, excited, or bored.

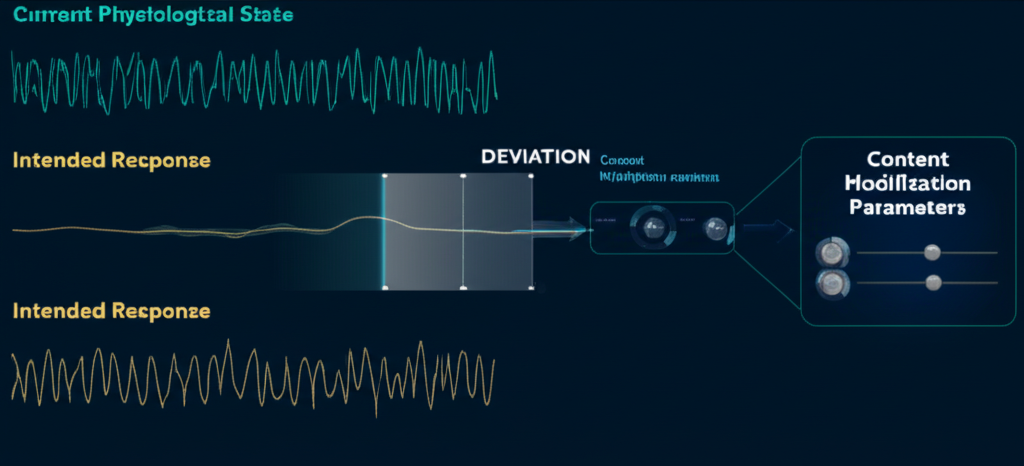

Understanding the Gap

The core innovation is computing the deviation between your current physiological state and the intended response.

If you're watching a thriller and you're supposed to be tense, but your heart rate is steady and your skin conductance is low, the system infers that the tension isn't landing. It can then generate content that builds suspense more effectively for you.
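
To make the thriller example concrete, here is a minimal sketch of one way such a deviation could be computed. The scoring formula (average fractional rise of heart rate and skin conductance over a resting baseline), the `Reading` type, and the sample numbers are all assumptions made for illustration, not the method in the patent application.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    heart_rate_bpm: float
    skin_conductance_us: float  # microsiemens


def arousal_score(reading: Reading, baseline: Reading) -> float:
    """Illustrative metric: mean fractional rise over the viewer's resting baseline."""
    hr_rise = (reading.heart_rate_bpm - baseline.heart_rate_bpm) / baseline.heart_rate_bpm
    sc_rise = (
        reading.skin_conductance_us - baseline.skin_conductance_us
    ) / baseline.skin_conductance_us
    return (hr_rise + sc_rise) / 2


def deviation(reading: Reading, baseline: Reading, intended_arousal: float) -> float:
    """Positive result: the viewer is calmer than the scene intends."""
    return intended_arousal - arousal_score(reading, baseline)


baseline = Reading(heart_rate_bpm=65.0, skin_conductance_us=2.0)
steady = Reading(heart_rate_bpm=66.0, skin_conductance_us=2.1)   # tension not landing
tense = Reading(heart_rate_bpm=85.0, skin_conductance_us=3.0)    # tension landing

gap_steady = deviation(steady, baseline, intended_arousal=0.3)
gap_tense = deviation(tense, baseline, intended_arousal=0.3)
```

With these made-up numbers, `gap_steady` is positive (the scene should build more suspense) and `gap_tense` is slightly negative (the viewer is already past the intended level).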

This deviation drives real-time modification of:

- Pacing and rhythm of content

- Intensity and emotional tone

- Visual parameters (lighting, color, camera)

- Audio characteristics (tempo, key, dynamics)

- Narrative direction and complexity
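
One simple way to picture the list above is a proportional update: each content parameter shifts by an amount scaled to the deviation and is clamped to a safe range. The sketch below assumes a hypothetical `ContentParams` structure and hand-picked gains and limits; narrative direction is left out because it does not reduce to a single number.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ContentParams:
    cuts_per_minute: float   # pacing
    intensity: float         # emotional intensity, 0.0 to 1.0
    brightness: float        # visual parameter, 0.0 to 1.0
    music_tempo_bpm: float   # audio parameter


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def adjust(params: ContentParams, deviation: float) -> ContentParams:
    """Positive deviation (viewer calmer than intended) pushes toward more tension."""
    d = clamp(deviation, -1.0, 1.0)
    return replace(
        params,
        cuts_per_minute=clamp(params.cuts_per_minute * (1 + 0.5 * d), 2.0, 60.0),
        intensity=clamp(params.intensity + 0.3 * d, 0.0, 1.0),
        brightness=clamp(params.brightness - 0.2 * d, 0.0, 1.0),  # darker when building tension
        music_tempo_bpm=clamp(params.music_tempo_bpm * (1 + 0.2 * d), 40.0, 200.0),
    )


current = ContentParams(cuts_per_minute=10.0, intensity=0.5, brightness=0.6, music_tempo_bpm=100.0)
updated = adjust(current, deviation=0.5)
```

A generative model would take parameters like these as conditioning inputs for the next segment; how that conditioning works is outside the scope of this sketch.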

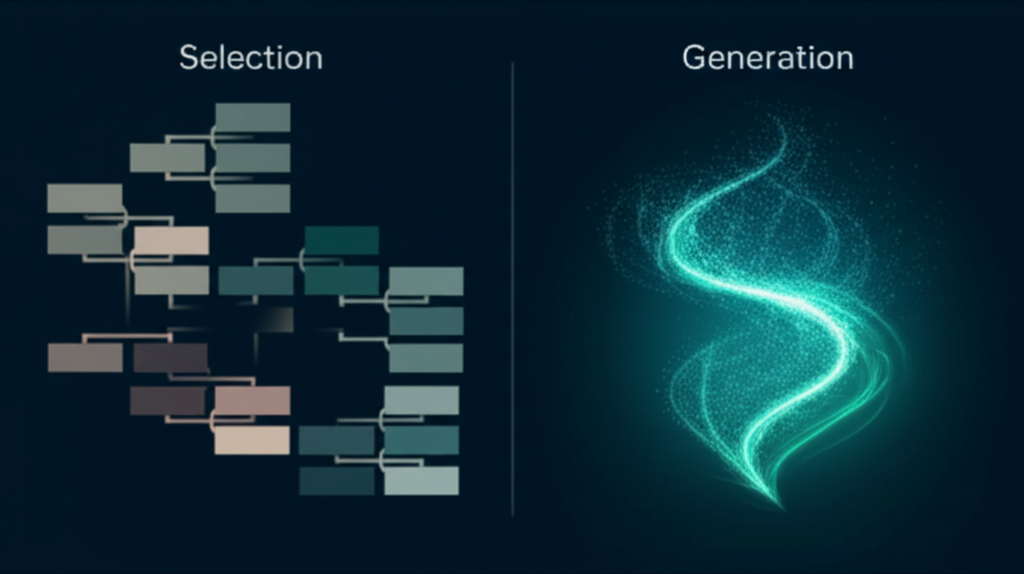

True Generation, Not Selection

This is the critical distinction. "Adaptive" systems today select from pre-made options. Our technology generates content that never existed before.

Selection-Based "Adaptive"

- Branching storylines (choose your path)

- Difficulty sliders (easy/medium/hard)

- Playlist algorithms (pick next song)

- Content already exists, just picked

The content was made for everyone. You just get shown different pieces.

True Generative Synthesis

- Narrative created in real time

- Music composed for this moment

- Visuals rendered for your state

- Content didn't exist until now

The content is made for you, right now, based on your body's response.

Patent-Pending IP Highlights

A plain-language summary of what the claims are directed to in Application 18/330,111. This is a high-level overview, not a substitute for the claims.

Independent Claim Themes

- Real-time generation during playback using a generative AI model.

- Second content segment is newly generated, not pre-chosen, not pre-made, and not assembled from preexisting assets.

- Content updates are driven by a deviation between biometric state and an intended physiological response.

- Continuous stream output so the user experiences a seamless narrative.

Dependent Claim Extensions

- Multi-modal biometrics and multi-user weighting for group experiences.

- Multi-sensory outputs via auxiliary actuators (haptic, olfactory, thermal, lighting, audio spatialization).

- World-model or physics-engine generation with real-time simulation parameter updates.

- Natural-language request parsing and intent-to-content mapping.
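
For the multi-user weighting mentioned in the dependent claim themes, one plausible reading is a weighted average of each viewer's deviation. The sketch below assumes exactly that; the function name `group_deviation` and the weights are hypothetical, and the application may define weighting differently.

```python
from typing import Sequence


def group_deviation(deviations: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted mean of per-viewer deviations (an assumed aggregation rule)."""
    if len(deviations) != len(weights):
        raise ValueError("deviations and weights must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(d * w for d, w in zip(deviations, weights)) / total_weight


# Three viewers; the third counts double (for example, the person who chose the film).
combined = group_deviation([0.4, -0.2, 0.1], [1.0, 1.0, 2.0])
```

Here `combined` works out to 0.1, so the group as a whole is slightly calmer than intended even though one viewer is already over the target.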

Multi-Sensory Output

Generated content can be delivered across multiple channels to create truly immersive, responsive experiences.

Visual

Screens, VR, AR, projections

Audio

Spatial audio, adaptive music

Haptic

Vibration, pressure, texture

Ambient

Room lighting, environment

Thermal

Temperature modulation

Vestibular

Balance, motion feedback

Olfactory

Scent delivery

Multi-Modal

Combined sensory output